MSLE (Mean Squared Logarithmic Error) and MSE (Mean Squared Error) are both loss functions you can use for regression problems. But when should you use which?

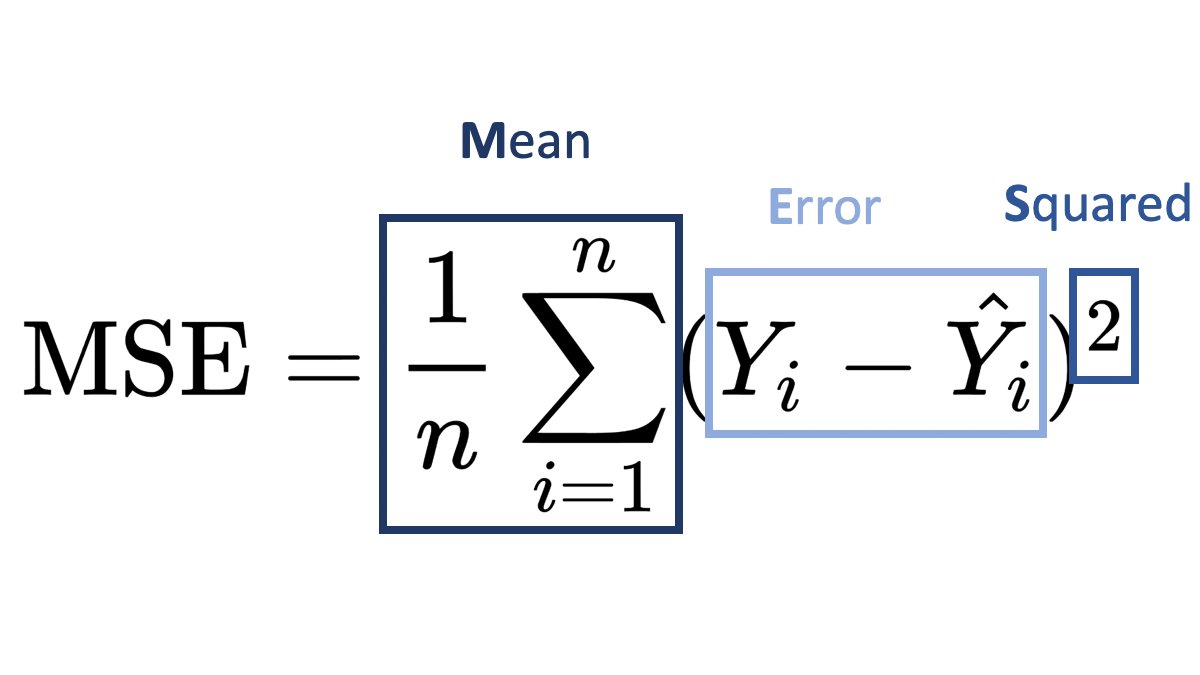

Mean Squared Error (MSE):

It works well when your target has a normal or normal-like distribution. Because it squares the raw errors, it is sensitive to outliers: a single large error can dominate the loss.

An example is shown below:

In this case, using MSE as your loss function makes much more sense than MSLE.

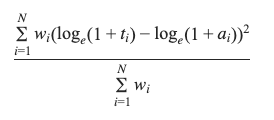
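
As a rough illustration of that outlier sensitivity, here is a minimal pure-Python MSE sketch. The function name `mse` and the sample numbers are hypothetical, chosen only to show how one large error dominates the average.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of squared raw differences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical, roughly normal targets with small prediction errors
y_pred = [10.5, 11.5, 11.0, 9.5]
print(mse([10.0, 12.0, 11.0, 9.0], y_pred))   # 0.1875

# Same predictions, but one target is an outlier: its squared error dominates
print(mse([10.0, 12.0, 11.0, 40.0], y_pred))  # 232.6875
```

A single point that is off by 30.5 raises the loss by more than three orders of magnitude, which is what you want when large errors on a normal-like target genuinely matter.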

Mean Squared Logarithmic Error (MSLE):

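- MSLE measures the average squared difference between the logarithms of the predicted and actual values. It is usually computed as the mean of (log(1 + predicted) - log(1 + actual))^2, so that zero values are allowed.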

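- Because of the logarithm, MSLE looks at relative rather than absolute differences: the same absolute error counts far less when the true value is large than when it is small. It also penalizes under-predictions more heavily than over-predictions of the same size.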

- It is less sensitive to outliers than MSE because the logarithm compresses large values, so a single very large target has much less influence on the loss.
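
The properties listed above can be checked with a small MSLE sketch. It assumes the common log(1 + y) form of MSLE and non-negative inputs; `msle` and the sample values are illustrative and not taken from any library.

```python
import math

def msle(y_true, y_pred):
    """Mean squared logarithmic error, using log(1 + x) for each value.

    Assumes non-negative inputs; log1p is undefined at or below -1.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(v < 0 for v in list(y_true) + list(y_pred)):
        raise ValueError("MSLE expects non-negative values")
    total = sum((math.log1p(p) - math.log1p(t)) ** 2 for t, p in zip(y_true, y_pred))
    return total / len(y_true)

# Same absolute error (10) on a small and a large target:
# the small target is penalized far more.
print(msle([10], [20]))      # about 0.418
print(msle([1000], [1010]))  # about 0.0001

# Same relative change in (1 + y) gives (almost exactly) the same loss, (ln 2)^2
print(msle([9], [19]), msle([99], [199]))  # both about 0.4805

# Asymmetry: under-predicting by 50 costs more than over-predicting by 50
print(msle([100], [50]) > msle([100], [150]))  # True
```

In both relative-error cases (1 + y) doubles, so the log difference is ln 2 regardless of scale; that is the sense in which MSLE measures relative error.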

An example where you can use MSLE:

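Here the target grows exponentially, so with MSE the few very large values would dominate the loss. MSLE compares values on a log scale and is the better choice. Note that MSLE is not only an evaluation metric: it is differentiable and can be used as a training loss (Keras, for example, provides it as `MeanSquaredLogarithmicError`). Some libraries expose it only as a metric, such as scikit-learn's `mean_squared_log_error`; in that case you can get the same effect by training with MSE on log(1 + y) and converting predictions back with exp(x) - 1. Keep in mind that MSLE is only defined for values greater than -1 and is normally used with non-negative targets.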
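
A minimal sketch of the log-target approach, assuming the simplest possible "model": a single constant prediction. Because MSLE is just MSE computed on log(1 + y), the constant that minimizes MSE on the transformed target, mapped back with `math.expm1`, is also the constant that minimizes MSLE. The data and helper names are hypothetical.

```python
import math

def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def msle(y_true, y_pred):
    """MSLE is simply MSE computed on log(1 + x) of both sequences."""
    return mse([math.log1p(v) for v in y_true], [math.log1p(v) for v in y_pred])

# Hypothetical target with exponential growth: 2**k - 1 for k = 1..6
y = [1.0, 3.0, 7.0, 15.0, 31.0, 63.0]
n = len(y)

# Best constant under MSE on the raw target: the arithmetic mean
c_mse = sum(y) / n  # 20.0

# "Train" with MSE on log1p(y) (the mean in log space), then map back with expm1
z = [math.log1p(v) for v in y]
c_msle = math.expm1(sum(z) / n)  # about 10.31

print(msle(y, [c_msle] * n) < msle(y, [c_mse] * n))  # True
print(mse(y, [c_mse] * n) < mse(y, [c_msle] * n))    # True
```

The two constants differ sharply (20 versus about 10.3): the raw mean is pulled up by the largest values, while the log-space estimate behaves like a geometric mean of (1 + y). The same idea carries over to any regressor: fit it on `log1p(y)` with an MSE loss and apply `expm1` to its predictions.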

In general, the choice between MSLE and MSE depends on the nature of the problem, the distribution of errors, and the desired behavior of the model. It’s often a good idea to experiment with both and evaluate their performance using appropriate evaluation metrics before finalizing the choice.
