Deep learning has reshaped many fields in recent years. One architecture that came out of this work is the Deep Cross Network (DCN). DCN augments a standard feed-forward network with a dedicated cross network, with the aim of learning feature interactions more efficiently than a plain feed-forward network can. This article explains what the Deep Cross Network is, how it differs from traditional feed-forward networks, and where it is applied.

Understanding Deep Cross Network (DCN)

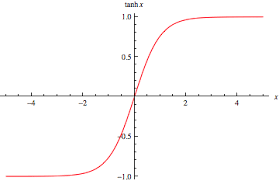

The Deep Cross Network (DCN), also written Deep & Cross Network, is a hybrid model that combines a deep neural network (DNN) with explicit feature crossing. It was introduced to handle high-dimensional sparse data more efficiently. The deep part learns non-linear input-output mappings, while the crossing part captures interactions between feature dimensions, that is, products of two or more input features.

The core idea behind DCN is to apply explicit and efficient feature crossing directly in the input space. A cross network applies a cross operation to the input features at every layer, which lets it learn explicit feature interactions of bounded degree. Its output is then combined with that of a deep network, which models arbitrary interactions implicitly.
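The cross operation can be sketched in a few lines of NumPy. The formula used here, x_{l+1} = x_0 (x_l · w) + b + x_l, is the one given in the original DCN paper rather than anything stated in this article, and the function and variable names are illustrative assumptions.

```python
import numpy as np

def cross_layer(x0, xl, w, b):
    """One cross layer: x_{l+1} = x0 * (xl . w) + b + xl.

    x0, xl: arrays of shape (batch, d); w, b: arrays of shape (d,).
    Names and shapes are illustrative, not taken from the article.
    """
    # (xl @ w) is one scalar per example. Scaling x0 by it produces
    # weighted products between the entries of x0 and the entries of xl.
    scale = xl @ w                       # shape (batch,)
    # Adding xl back keeps the lower-order terms (a residual connection).
    return x0 * scale[:, None] + b + xl  # shape (batch, d)

x0 = np.array([[1.0, 2.0, 3.0]])
w = np.array([0.5, -1.0, 0.25])
b = np.zeros(3)

# The first cross layer takes the raw input as both x0 and xl.
x1 = cross_layer(x0, x0, w, b)
# x0 @ w = -0.75, so x1 = -0.75 * x0 + x0 = [[0.25, 0.5, 0.75]]
```

Note that the layer's only parameters are the two vectors `w` and `b`, each the same length as the input.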

How DCN Differs From Feed-Forward Networks

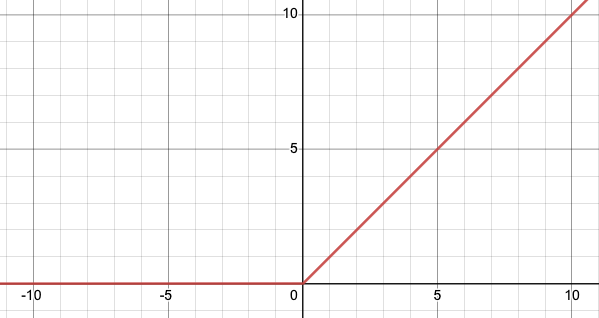

Feed-forward networks, of which the Multilayer Perceptron (MLP) is the standard example, are the simplest type of artificial neural network. In these networks, data moves in one direction: from the input layer, through the hidden layers, and finally to the output layer. There is no looping or cycling back of data.

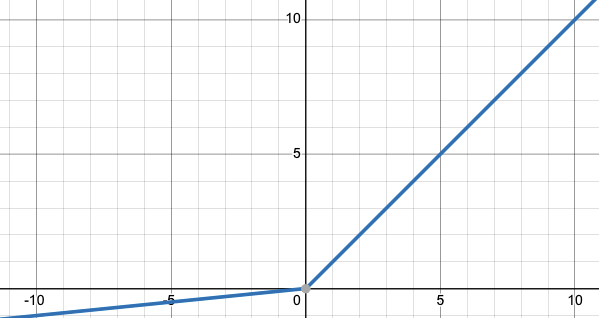

DCN is also a feed-forward model in this sense: nothing loops back. What it adds is a cross network that, in the original design, runs in parallel with the deep network. Each cross layer multiplies its input with the original input features, so feature interactions are built explicitly and carried forward to the next layer rather than left for hidden units to discover. This combination of explicit, bounded-degree feature crossing with a deep network gives DCN its particular strength.
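As a rough illustration of how the two parts fit together, here is a minimal NumPy sketch of a forward pass with the cross network and the deep network side by side. It assumes the parallel layout of the original DCN design, ReLU hidden layers, and a sigmoid output. The layer sizes, random weights, and function names are all made up for the example, and nothing here is trained.

```python
import numpy as np

rng = np.random.default_rng(0)

def cross_network(x0, weights, biases):
    """Stack of cross layers: x_{l+1} = x0 * (x_l . w_l) + b_l + x_l."""
    x = x0
    for w, b in zip(weights, biases):
        x = x0 * (x @ w)[:, None] + b + x
    return x

def deep_network(x0, layers):
    """Plain MLP branch with ReLU activations."""
    h = x0
    for W, b in layers:
        h = np.maximum(h @ W + b, 0.0)
    return h

def dcn_forward(x0, cross_params, deep_params, w_out, b_out):
    """Run both branches on the same input and combine their outputs."""
    cross_out = cross_network(x0, *cross_params)              # (batch, d)
    deep_out = deep_network(x0, deep_params)                  # (batch, hidden)
    combined = np.concatenate([cross_out, deep_out], axis=1)  # (batch, d + hidden)
    logit = combined @ w_out + b_out
    return 1.0 / (1.0 + np.exp(-logit))                       # probability per example

# Hypothetical sizes: 4 input features, 8 hidden units, batch of 5.
d, hidden, batch = 4, 8, 5
cross_params = (
    [rng.normal(size=d) * 0.1 for _ in range(2)],  # two cross-layer weight vectors
    [np.zeros(d) for _ in range(2)],               # two cross-layer bias vectors
)
deep_params = [
    (rng.normal(size=(d, hidden)) * 0.1, np.zeros(hidden)),
    (rng.normal(size=(hidden, hidden)) * 0.1, np.zeros(hidden)),
]
w_out = rng.normal(size=d + hidden) * 0.1
b_out = 0.0

x0 = rng.normal(size=(batch, d))
probs = dcn_forward(x0, cross_params, deep_params, w_out, b_out)
```

Data flows strictly from input to output in both branches. The only unusual connection is that every cross layer reuses the original input `x0`.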

Another notable difference lies in parameter efficiency. A fully connected layer needs a weight matrix whose size grows with the product of its input and output widths, which becomes expensive on wide, sparse inputs. A cross layer in the original DCN formulation needs only a weight vector and a bias vector the size of the input, so the cross network adds a number of parameters that grows linearly with the input dimension.
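To make the efficiency comparison concrete, the short calculation below counts parameters for a stack of cross layers and for a stack of dense layers of the same width. It assumes the vector-weight cross layer of the original DCN and, purely for illustration, dense layers that map d inputs to d outputs. The input width of 1000 and the helper names are hypothetical.

```python
def cross_param_count(d, num_layers):
    # Each cross layer holds one weight vector and one bias vector of length d.
    return 2 * d * num_layers

def dense_param_count(d, num_layers):
    # Each d-to-d dense layer holds a d x d weight matrix plus a bias vector.
    return (d * d + d) * num_layers

d, num_layers = 1000, 3
cross_total = cross_param_count(d, num_layers)  # 6000
dense_total = dense_param_count(d, num_layers)  # 3003000
```

In practice the deep branch is usually narrower than the input, so the gap is smaller than in this example, but the linear versus quadratic growth is the point.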

A third distinguishing feature is how DCN models feature interactions. A standard feed-forward network has to learn multiplicative interactions implicitly, which can take substantial depth or width. In DCN, each additional cross layer raises the highest interaction order by one, so a cross network with L layers covers interactions up to order L + 1, while the deep network picks up whatever the cross network misses.
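To see where the bounded degree of the cross network comes from, the sketch below looks at the simplest possible case: a single input feature, no bias, and the cross-layer formula of the original DCN. In that case the output is a polynomial in the input, and its degree can be read off with finite differences. The helper names and the choice of w = 1 are assumptions made for the demonstration.

```python
import numpy as np

def cross_stack_scalar(x, w, num_layers):
    """Cross layers for a single feature with zero bias: x_{l+1} = x0 * x_l * w + x_l."""
    x0 = x
    xl = x
    for _ in range(num_layers):
        xl = x0 * xl * w + xl
    return xl

def polynomial_degree(values):
    """Degree of a polynomial sampled at evenly spaced points.

    Differences are taken repeatedly until the sequence is constant;
    the number of steps needed is the degree.
    """
    diffs = np.asarray(values, dtype=float)
    degree = 0
    while len(diffs) > 1 and np.any(diffs != diffs[0]):
        diffs = np.diff(diffs)
        degree += 1
    return degree

xs = np.arange(10, dtype=float)  # integer sample points keep the arithmetic exact
degrees = [polynomial_degree(cross_stack_scalar(xs, 1.0, L)) for L in range(4)]
# degrees == [1, 2, 3, 4]: L cross layers give a polynomial of degree L + 1
```

With several features the same pattern holds per term: each extra cross layer multiplies in one more factor from the original input, so the highest interaction order grows by one per layer.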

Applications of Deep Cross Network

The Deep Cross Network has numerous applications across various domains. Some of the most prevalent are:

- Recommendation Systems: DCN can effectively handle high-dimensional data and capture complex feature interactions, making it suitable for recommendation systems. It can model the interactions between users and items efficiently to provide accurate recommendations.

- Advertisement Click Prediction: DCN's ability to capture high-order feature interactions makes it a good fit for predicting advertisement clicks. By modeling the relationships between user behavior, ad characteristics, and context, it can estimate the likelihood of a user clicking on an ad.

- Fraud Detection: In banking and finance, DCN can be used for fraud detection by modeling interactions between the attributes of a transaction, where a combination of features may signal fraud even though each feature looks normal on its own.

- Natural Language Processing: DCN can also be applied to NLP tasks such as sentiment analysis or text classification when the text is represented as high-dimensional sparse features, where it can learn interactions between those features.

Conclusion

The Deep Cross Network is a notable development in deep learning for sparse, high-dimensional data. Its combination of a deep network with explicit feature crossing distinguishes it from a plain feed-forward network and makes it a practical tool for handling high-dimensional sparse data.

Let me know in the comments if you want to go over an application of Deep Cross Networks using an example dataset.