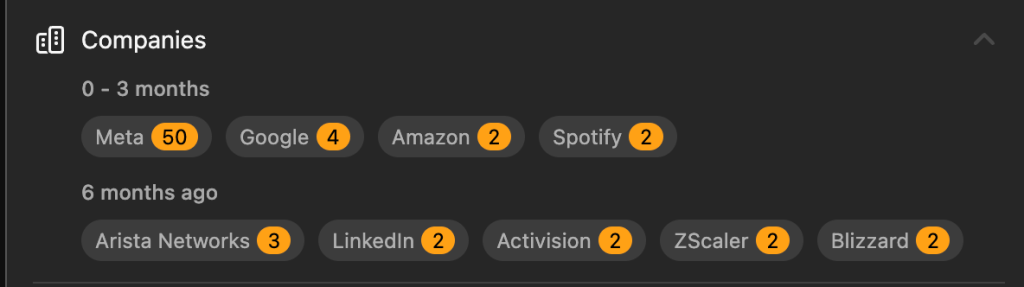

This is a premium problem on LeetCode and has also been asked by Meta as of the date of publishing almost 50 times.

The problem statement is pretty simple, given an incoming integer stream, you have to calculate the simple moving average. The size or the window is also given.

class MovingAverage:

def __init__(self, size: int):

def next(self, val: int) -> float:

# Your MovingAverage object will be instantiated and called as such:

# obj = MovingAverage(size)

# param_1 = obj.next(val)

Now a naive solution would be to initialise a data structure like an array and keep appending elements which are given in the next method and then compute the average of the last n elements, n being the size, every time.

from statistics import mean

class MovingAverage:

def __init__(self, size: int):

self.size = size

self.nums = []

def next(self, val: int) -> float:

self.nums.append(val)

return mean(self.nums[-self.size:len(self.nums)])

Now this will work, but there are two issues here. One we’re storing elements not required, meaning we only need to store the latest numbers till the dimension of size. Also, there is a lot of repeated work here, where we’re calculating the mean every time. Making this solution O(N) in time complexity and also O(N) in space complexity.

We can improve on both aspects. First, we will use a queue to store our numbers. The reason for doing so is that once the size of the queue becomes equal to our window size. We will pop elements from the left and add the numbers on the right. To do this efficiently, we will use the deque data structure in python.

We will also create a variable called sum, where we will track the running sum. This will allow us to compute the moving average in O(1) time complexity.

from collections import deque

class MovingAverage:

def __init__(self, size: int):

self.size = size

self.sum = 0

self.vals = deque()

def next(self, val: int) -> float:

if len(self.vals) >= self.size:

self.sum -= self.vals.popleft()

self.sum+=val

self.vals.append(val)

return self.sum/len(self.vals)

Here, you can see that we keep track of what the size of our self.vals is. If it exceeds the window size, we pop from the left and decrease our running sum.

No matter what the size is, we always increment our sum and also append the number to our queue.

There might be some minor improvements, but in terms of time and space complexity, this is the most optimal solution.

Let me know if you found this solution helpful. I’ll be posting more LeetCode solutions on the blog as I feel they are also an integral component to cracking Machine Learning jobs, especially at Meta, Google etc.